How Can AI Change Computerized System Validation?

By Kamila Novak, KAN Consulting, Maria Kellner, Virtua Pharma Technology, Paula Horowitz, AbbVie LLC, Richard Siconolfi, Richard M. Siconolfi LLC, Terry Katz, Daiichi Sankyo, and Sridevi Nagarajan, Ayusarogya Consultant

Computerized system validation (CSV) represents one of the most critical and resource-intensive processes in healthcare and life sciences organizations. For clinical leaders, the stakes are enormous, as inadequate validation can compromise patient safety, regulatory compliance, and organizational integrity. Traditional CSV methodologies remain labor-intensive, time-consuming, and open to human error. AI is poised to fundamentally reshape how organizations approach CSV, offering new pathways to enhance rigor while optimizing resource allocation and efficiency.

AI has already been successfully implemented in a growing number of areas across regulated product development, spanning drug discovery, preclinical modelling, and clinical trial design to clinical operations, risk management, medical imaging, safety monitoring, medical writing, data analytics, post-marketing activities, and more. Regulatory agencies and other organizations, such as the Organisation for Economic Co-operation and Development (OECD) and the International Organization for Standardization (ISO), acknowledge AI’s potential and work on guidelines and standards for its ethical and responsible use.

This paper explores how AI technologies are changing the CSV landscape and what clinical leaders, as well as other stakeholders, need to understand about this transformation.

Understanding CSV In The Clinical Context

CSV is the systematic process of establishing documented evidence that a computerized system reliably performs as intended. It is required for all systems used for regulated purposes and organizations’ decision-making. For clinical organizations, this applies to EHRs, laboratory information systems, pharmacy management systems, medical devices, EDC, and other software applications that directly or indirectly impact patient care. Many industry professionals perceive CSV as an essential but burdensome obligation: necessary for compliance but consuming significant capital, personnel time, and IT resources.

Computerized systems used for regulated purposes, such as pharmaceutical manufacturing, medical device development, and clinical trials, are typically categorized according to the Good Automated Manufacturing Practice (GAMP 5) guidelines [1] published by the International Society for Pharmaceutical Engineering (ISPE). These guidelines were developed for the regulated environment and are based on risk assessment. Most of these systems belong to GAMP category 3 (non-configurable), 4 (configurable), or 5 (custom, also known as bespoke, applications). Validation complexity and risk increase from category 3 to category 5.
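As a rough illustration of this categorization, the sketch below models the three categories named above and orders a system inventory so that the highest category, and therefore the highest expected validation complexity, comes first. The names `GampCategory` and `order_by_validation_effort` and the inventory entries are hypothetical and serve only to illustrate the idea; how a real system is categorized depends on its intended use and a documented risk assessment.

```python
from enum import IntEnum


class GampCategory(IntEnum):
    """The three GAMP 5 software categories named in the text."""
    NON_CONFIGURABLE = 3
    CONFIGURABLE = 4
    CUSTOM = 5


def order_by_validation_effort(systems):
    """Sort (name, category) pairs so the highest category comes first."""
    return sorted(systems, key=lambda pair: pair[1], reverse=True)


# Hypothetical inventory; names and category assignments are illustrative only.
inventory = [
    ("Off-the-shelf instrument firmware", GampCategory.NON_CONFIGURABLE),
    ("In-house randomization tool", GampCategory.CUSTOM),
    ("Configured EDC platform", GampCategory.CONFIGURABLE),
]

prioritized = order_by_validation_effort(inventory)
for name, category in prioritized:
    print(f"Category {category.value}: {name}")
```

Ordering by category is only a first approximation of validation effort; it does not replace a system-specific risk assessment.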

What Could AI Do In CSV?

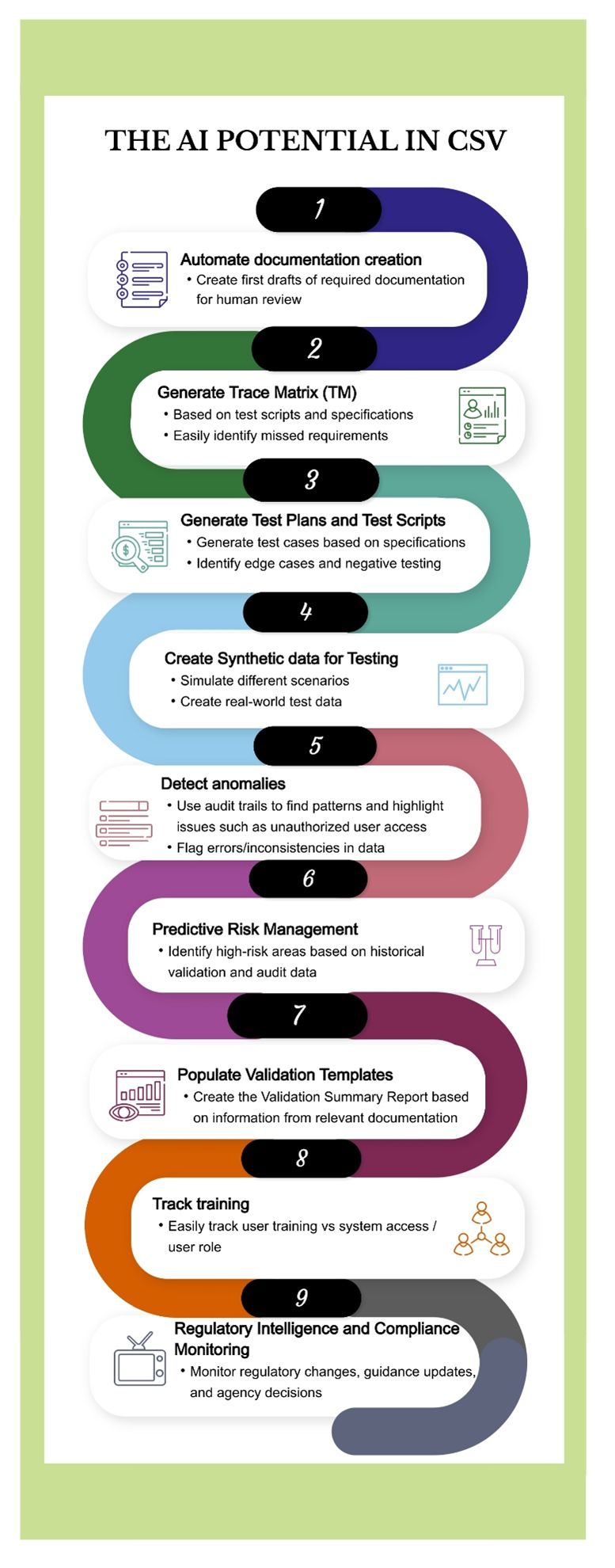

Our previous paper, titled "The Next AI Revolution: Computer System Validation" [2], examined several promising applications of AI in CSV. The use cases illustrated in Figure 1 [3] (originally presented as a poster and updated for this article) range from automated documentation to training tracking and currently exist at varying levels of maturity.

While some remain theoretical, others are undergoing pilot testing; however, none have yet achieved widespread operational adoption. As AI-enabled tools continue to advance, these use cases are poised to become standard components of CSV workflows, paving the path to additional applications.

Implementation Considerations

While the potential of AI in CSV is substantial, successful implementation requires a thoughtful strategy that starts with pilot projects rather than organization-wide transformation. Any AI tool used to support CSV must itself be validated, and the SMEs involved should gain experience with pilot applications before expanding the scope. This requires an initial investment but yields a long-term payoff as the validated AI system becomes a reusable asset. It is also important to establish clear validation criteria for AI systems.

Maintaining human oversight and judgment is critical at every stage: AI outputs must be reviewed and approved by qualified personnel. AI augments human expertise; it does not replace it. SMEs and organizational decision makers should establish clear governance for the acceptability of AI-generated validation outputs. Decision rights about AI recommendations, quality gates for AI-generated documentation, and escalation paths for situations where AI recommendations conflict with human judgment should be an essential part of AI governance processes.

Change management supports the continued improvement of such AI systems. Some people may be skeptical about AI-assisted approaches to CSV, so clear communication about how AI improves their work (making tedious documentation faster, reducing human error, and allowing focus on higher-value analytical work) may facilitate adoption.
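One way to picture the quality gate and escalation path described here is the minimal sketch below. It assumes a simplified model in which each AI-generated artifact carries an AI recommendation and, once reviewed, a decision from a qualified person. The class `AiArtifact`, the function `quality_gate`, and the status strings are hypothetical and do not come from any standard or tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AiArtifact:
    """An AI-generated validation deliverable awaiting human review (illustrative)."""
    name: str
    ai_recommendation: str                   # "accept" or "reject"
    reviewer_decision: Optional[str] = None  # None until a qualified person reviews


def quality_gate(artifact: AiArtifact) -> str:
    """Return the artifact's status; nothing is approved without human review."""
    if artifact.reviewer_decision is None:
        return "pending_human_review"
    if artifact.reviewer_decision != artifact.ai_recommendation:
        # The AI recommendation conflicts with human judgment: escalate.
        return "escalate"
    return "approved" if artifact.reviewer_decision == "accept" else "rejected"


draft = AiArtifact("Test script TS-001 (hypothetical)", ai_recommendation="accept")
print(quality_gate(draft))  # no reviewer decision recorded yet

draft.reviewer_decision = "reject"
print(quality_gate(draft))  # reviewer disagrees with the AI recommendation
```

In this sketch the AI recommendation alone can never produce an approved status, which mirrors the principle that AI augments rather than replaces human judgment.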

Benefits Related To AI Use In CSV

When thoughtfully implemented, AI-enhanced CSV can offer significant advantages:

- Accelerated timelines enable faster system implementation and security updates. This translates to a competitive advantage as well as reduced security exposure and vulnerability.

- Enhanced quality comes from more comprehensive testing, better risk assessment, and reduced human error in documentation. This strengthens regulatory compliance and patient safety.

- Resource optimization allows validation teams to accomplish more with existing staff, giving the SMEs space to focus on complex analytical and assessment work rather than routine documentation.

- Regulatory risk is reduced through more systematic traceability, comprehensive documentation, and alignment with evolving regulatory expectations.

- Continuous validation should happen throughout the system life cycle rather than as a one-time event. This maintains system integrity and catches problems or risks earlier.

Risks Related To AI Use In CSV

As with any other new technology, the implementation of AI in CSV comes with risks that, unless adequately mitigated, can turn into issues. For example, unreliable (i.e., insufficiently validated) AI tools that should track updates of applicable regulations, guidelines, and standards can generate inaccurate trace matrices, while others can wrongly assess the system risks, generate deficient or incomplete test cases, validation plans with omissions, validation summary reports with wrong conclusions, etc. CSV, including validation of AI tools for CSV, will always require human oversight, SMEs’ judgment, regulatory awareness, and deep technical understanding.
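A simple independent check can help reviewers spot one of the failure modes mentioned above, an incomplete trace matrix. The sketch below assumes the matrix is available as a mapping from requirement IDs to lists of linked test case IDs. The function name `find_untraced_requirements` and all IDs are hypothetical.

```python
def find_untraced_requirements(requirement_ids, trace_matrix):
    """Return the requirement IDs that have no linked test case.

    trace_matrix maps a requirement ID to a list of test case IDs.
    A missing key and an empty list are both treated as a gap.
    """
    return [req for req in requirement_ids if not trace_matrix.get(req)]


# Hypothetical requirement IDs and an AI-generated trace matrix to be checked.
requirements = ["URS-1", "URS-2", "URS-3"]
ai_generated_matrix = {
    "URS-1": ["TC-1", "TC-2"],
    "URS-2": [],
}

gaps = find_untraced_requirements(requirements, ai_generated_matrix)
print(gaps)
```

A check like this only detects missing links; whether a linked test case actually verifies its requirement still needs SME review.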

In addition, we should look at frameworks that help us safeguard against unintentional and intentional misuse or abuse. Some of today’s framework principles may remind us of the Three Laws of Robotics from the book I, Robot by Isaac Asimov [4], namely “protect humans, obey orders, protect self.” The book tells the story of developing a human–AI relationship, including philosophical and ethical questions and dilemmas arising from the relationship.

In 2023 and 2025, the OECD and ISO, respectively, published nearly identical frameworks for ethical and responsible use of AI. The key principles [5] include:

- Fairness: Data sets used for training the AI system must be given careful consideration to avoid discrimination.

- Transparency: AI systems should be designed in a way that allows users to understand how the algorithms work.

- Non-maleficence: AI systems should avoid harming individuals, society, or the environment.

- Accountability: Developers, organizations, and policymakers must ensure AI is developed and used responsibly.

- Privacy: AI must protect people's personal data, which involves developing mechanisms for individuals to control how their data is collected and used.

- Robustness: AI systems should be secure, i.e., resilient to errors, adversarial attacks, and unexpected inputs.

- Inclusiveness: Engaging with diverse perspectives helps identify potential ethical concerns of AI and ensures a collective effort to address them.

Summary

The convergence of AI capabilities with clinical and regulatory demands suggests that CSV transformation is not a distant prospect but an emerging reality. Leading regulated industries are already piloting AI-enhanced validation approaches. Decision makers who understand these developments and move strategically to implement appropriate AI tools will enhance organizational performance and competitive position.

The transition will not be instantaneous, as regulatory expectations will continue to evolve and human judgment will remain essential. But the direction is clear. AI is not replacing CSV; rather, it is making it more intelligent, comprehensive, efficient, and effective.

For clinical leaders seeking to modernize validation approaches while maintaining the rigor that patient safety and regulatory compliance demand, AI-enhanced CSV represents a strategic opportunity worth pursuing.

Conclusion

CSV will always require human judgment, regulatory awareness, and deep technical understanding. But the mechanical and tedious aspects of validation, such as test creation, execution, documentation, and traceability management, are increasingly augmented by AI technologies that work faster, more systematically, and with fewer errors than traditional manual approaches.

Decision makers and stakeholders who embrace this transformation thoughtfully with appropriate governance and quality oversight will strengthen their organizations' ability to deliver safe, effective, compliant regulated systems.

References:

1. International Society for Pharmaceutical Engineering: GAMP 5, second edition

2. Novak K, Kellner M, Horowitz P, Siconolfi R, Katz T, Nagarajan S: The Next AI Revolution: Computer System Validation, DIA Global Forum, November 2025

3. Poster: Revolutionizing Computer System Validation with Artificial Intelligence – A Risk-Based Approach, presented by the DIA GCP & QA Community System Validation Working Group and AI in Healthcare Community at the DIA Global Annual Meeting, June 2025

4. Asimov I: I, Robot. Gnome Press, 1950

5. ISO: Building a responsible AI – Principles, January 2025

Additional resources:

- GAMP Artificial Intelligence Guide, ISPE, 2025

- ISO 42001:2023 AI Management System

- OECD: AI Principles, 2024

About The Authors:

Kamila Novak, MSc in molecular genetics, has been involved in clinical research since 1995, working in various positions in pharma and CROs. In 2010, she started working as a contractor and incorporated several years later. Her business activities include auditing, consulting for QMS establishment, clinical risk management, and process improvements, training, and medical writing. Kamila is a certified Lead Auditor for GDPR and six ISO standards and a certified auditor for two more. She is an active member of the DIA, the SQA, CDISC, the Florence Healthcare Site Enablement League, and other professional organisations. She publishes articles and speaks at webinars and conferences. She and her company actively support capacity building programs, access to healthcare, and education in Africa.

Maria Kellner has over 25 years of technology, validation, and process engineering experience in the Biopharmaceutical, CRO and software industries. She owns a consulting company, Virtua Pharma Technology, which is geared to help Biopharmaceutical companies and CROs implement and manage GXP software. She is fluent in all areas of R&D, including the software and processes needed to successfully help them function and flourish.

Paula Horowitz is a Quality, Project and Data Management professional with 30+ years of experience in Biopharmaceutical, CRO and academic environments. Currently, at AbbVie, Ms. Horowitz is a Program Manager, Software Validation within the QA organization. She provides QA risk-based guidance and support for computerized systems utilized in global GCP-regulated clinical trials. Prior to joining AbbVie, Ms. Horowitz led and collaborated with teams for strategic design and implementation of process improvements and initiatives to ensure risk-based data integrity approaches.

Richard Siconolfi earned a BS in Biology (Bethany College, Bethany, West Virginia) and MS degree in Toxicology (University of Cincinnati College of Medicine, Cincinnati, Ohio). He worked for The Standard Oil Co., Gulf Oil Co., Sherex Chemical Co., and the Procter & Gamble Co. Currently, he is a consultant in computer system validation, Part 11 Compliance, Data Integrity, and Software Vendor Audits (“The Validation Specialist”, Richard M Siconolfi, LLC). Richard is a co-founder of the Society of Quality Assurance and was elected president in 1990. He is a member of the Beyond Compliance Specialty Section, Computer Validation IT Compliance Specialty Section, and Program Committee. Also, he is a member of Research Quality Assurance’s IT Committee and the Drug Information Association GCP&QA Community. The Research Quality Assurance professional society appointed him a Fellow in 2014.

Terry Katz is an experienced Leader in Biostatistics, Statistical Programming and Data Management for pharmaceutical development. Most recently, he headed Biostatistics/DM Functional Excellence at Daiichi Sankyo, emphasizing processes, compliance and CAPAs. Previously, he headed Biostatistics at Merck Animal Health and ImClone Systems, and was a statistical leader at PRA International and Schering Plough. He was an adjunct professor in Biostatistics and completed a 3-month pro bono fellowship in Kenya to improve capacity and capability for local hospitals to conduct clinical trials.

Sridevi Nagarajan is a Transformational R&D leader driving AI-enabled digital modernization and regulatory innovation across the pharma value chain, a strategic architect of enterprise frameworks and processes spanning regulatory affairs, CMC, clinical development, quality, and safety. She promotes global adoption to accelerate time-to-market and reduce development costs. She is considered a thought leader on AI applications in regulatory strategy and technology with health authorities' engagement resulting from her proven ability to translate business strategy into scalable operational capabilities that maximize R&D portfolio value, accelerate approvals, and create sustainable competitive advantage.
